
Katzenpost provides a ready-to-deploy Docker image for developers who need a non-production test environment for developing and testing client applications and server-side plugins. By running this image on a single computer, you avoid the need to build and manage a complex multi-node mixnet. The image can also be run using Podman.

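The test mix network includes three directory authorities, one gateway node, one service node, and three mix nodes (one per layer). These are described in the section called “Network topology and components”.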

Before running the Katzenpost docker image, make sure that the following software is installed.

- A Debian GNU/Linux or Ubuntu system

- git, golang (Go), and make

- Podman and podman-compose (recommended), or Docker and Docker Compose


Note: If both Docker and Podman are present on your system, Katzenpost uses Podman. Podman is a daemonless, drop-in equivalent to Docker that does not require superuser privileges to run.

On Debian or Ubuntu, you can install the required software with the following commands, run as superuser. Apt pulls in any further dependencies.

#apt update

#apt install git golang make podman podman-compose

Note: You can also install Docker and docker-compose instead of Podman, but Podman is recommended as it runs rootless by default.
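Before continuing, you can confirm that the tools installed above are on your PATH. The following is a minimal POSIX shell sketch and is not part of Katzenpost. The function name check_tools is hypothetical. It prints one line per command and returns a non-zero status if any command is missing.

```sh
# Hypothetical helper (not part of the Katzenpost Makefile): report whether
# each named command is on PATH; exit non-zero if any is missing.
# The body runs in a subshell so its variables do not leak.
check_tools() (
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    exit "$missing"
)
```

For example, the following command checks the Podman setup. Substitute docker and docker-compose if you installed Docker instead.

~$ check_tools git go make podman podman-compose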

Complete the following procedure to obtain, build, and deploy the Katzenpost test network.

- Obtain the Katzenpost code repository, hosted at https://github.com/katzenpost. The main Katzenpost repository contains code for the server components as well as the Docker image. Clone the repository with the following command (your directory location may vary):

  ~$ git clone https://github.com/katzenpost/katzenpost.git

- Navigate to the new katzenpost subdirectory and ensure that the code is up to date.

  ~$ cd katzenpost

  ~/katzenpost$ git checkout main

  ~/katzenpost$ git pull

- (Optional) Create a development branch and check it out.

  ~/katzenpost$ git checkout -b devel

- (Optional) If you are using Podman, enable and start the Podman socket service. Run the following command as a regular user. No superuser privileges are needed.

  $ systemctl --user enable --now podman.socket

  Note: Modern Podman automatically handles the DOCKER_HOST environment variable and socket configuration. The Makefile detects and uses Podman automatically.

- Navigate to katzenpost/docker. The Makefile contains targets to create, manage, and test the self-contained Katzenpost container network. To invoke a target, run a command using the following pattern, replacing target with a name from Table 1: Makefile targets:

  ~/katzenpost/docker$ make target

  Running make with no target specified returns a list of available targets.

Table 1: Makefile targets

| Target | Description |
|---|---|
| [none] | Display this list of targets. |
| start | Run the test network in the background. |
| stop | Stop the test network. |
| wait | Wait for the test network to have consensus. |
| watch | Display live log entries until Ctrl-C. |
| status | Show test network consensus status. |
| show-latest-vote | Show latest consensus vote. |
| run-ping | Send a ping over the test network. |
| clean-bin | Stop all components and delete binaries. |
| clean-local | Stop all components, delete binaries, and delete data. |
| clean-local-dryrun | Show what clean-local would delete. |
| clean | Same as clean-local, but also deletes the go_deps image. |

The first time that you run make start, the Docker image is downloaded, built, installed, and started. This takes several minutes. When the build is complete, the command exits while the network remains running in the background.

~/katzenpost/docker$ make start

Subsequent runs of make start either start or restart the network without building the components from scratch. The exception to this is when you delete any of the Katzenpost binaries (dirauth.alpine, server.alpine, etc.). In that case, make start rebuilds just the parts of the network dependent on the deleted binary. For more information about the files created during the Docker build, see the section called “Network topology and components”.

Note: When running make start, be aware of the following considerations:

After the make start command exits, the mixnet runs in the background, and you can run make watch to display a live log of the network activity.

~/katzenpost/docker$ make watch

...

<output>

...

When installation is complete, the mix servers vote and reach a consensus. You can use the wait target to wait for the mixnet to reach consensus and be ready to use. This can also take several minutes:

~/katzenpost/docker$ make wait

...

<output>

...

You can confirm that installation and configuration are complete by running make status from the same or another terminal. When the network is ready for use, status begins returning consensus information similar to the following:

~/katzenpost/docker$ make status

...

00:15:15.003 NOTI state: Consensus made for epoch 1851128 with 3/3 signatures: &{Epoch: 1851128 GenesisEpoch: 1851118

...

At this point, you should have a locally running mix network. You can test whether it is working correctly by using run-ping, which launches a packet into the network and watches for a successful reply. Run the following command:

~/katzenpost/docker$ make run-ping

If the network is functioning properly, the resulting output contains lines similar to the following:

19:29:53.541 INFO gateway1_client: sending loop decoy

!19:29:54.108 INFO gateway1_client: sending loop decoy

19:29:54.632 INFO gateway1_client: sending loop decoy

19:29:55.160 INFO gateway1_client: sending loop decoy

!19:29:56.071 INFO gateway1_client: sending loop decoy

!19:29:59.173 INFO gateway1_client: sending loop decoy

!Success rate is 100.000000 percent 10/10)

If run-ping fails to receive a reply, it eventually times out with an error message. If this happens, try the command again.
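If you script the ping check, the success-rate line is the part to inspect. The following minimal shell sketch assumes the output format shown in the sample above, that is, a line containing "Success rate is N percent". The function names ping_success_rate and ping_all_ok are hypothetical and are not provided by the Makefile. This document does not say whether run-ping writes to standard output or standard error, so the usage example merges both streams.

```sh
# Hypothetical helpers; they assume the "Success rate is N percent" line
# format shown in the sample run-ping output.

# Print the success-rate figure from the last matching line on stdin.
ping_success_rate() {
    sed -n 's/.*Success rate is \([0-9.]*\) percent.*/\1/p' | tail -n 1
}

# Succeed only if a success rate was found and it equals 100 percent.
ping_all_ok() (
    rate=$(ping_success_rate)
    [ -n "$rate" ] || exit 1
    # awk compares numerically, so "100.000000" counts as 100.
    awk -v r="$rate" 'BEGIN { exit !(r + 0 == 100) }'
)
```

For example:

~/katzenpost/docker$ make run-ping 2>&1 | ping_all_ok && echo "all pings answered"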

Note: If you use run-ping too soon after starting the mixnet, before consensus has been reached, the utility may crash with an error message or hang indefinitely. If this happens, press Ctrl-C (if necessary) to abort, check the consensus status with make status, and then retry run-ping.
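The check-then-retry procedure in the note above can be sketched in shell as follows. These are hypothetical helpers and are not part of Katzenpost. consensus_summary assumes the status line format shown in the make status sample ("Consensus made for epoch E with N/M signatures"). retry is a generic loop with a fixed delay between attempts.

```sh
# Hypothetical helper: print "<epoch> <signed>/<total>" from the last
# consensus line on stdin; print nothing if no consensus is reported yet.
consensus_summary() {
    sed -n 's|.*Consensus made for epoch \([0-9]*\) with \([0-9]*/[0-9]*\) signatures.*|\1 \2|p' | tail -n 1
}

# Hypothetical helper: run a command up to $1 times, sleeping $2 seconds
# between attempts; exit with the status of the last attempt.
retry() (
    attempts=$1
    delay=$2
    shift 2
    n=1
    while :; do
        "$@" && exit 0
        status=$?
        [ "$n" -ge "$attempts" ] && exit "$status"
        n=$((n + 1))
        sleep "$delay"
    done
)
```

For the sample status line shown earlier, the first command below prints 1851128 3/3 (the epoch, then signatures collected out of the total). Once consensus is reported, the second command re-runs the ping. The 3 attempts and 30-second delay are arbitrary example values. retry does not interrupt a hung run-ping, so press Ctrl-C in that case, as the note describes.

~/katzenpost/docker$ make status 2>&1 | consensus_summary

~/katzenpost/docker$ retry 3 30 make run-ping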

The mix network continues to run in the background until you stop it with the following command:

~/katzenpost/docker$ make stop

When you stop the network, the binaries and data are left in place. This allows for a quick restart.
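You can chain the start, wait, run-ping, and stop targets into a single smoke test, for example before committing a change to a plugin. The following sketch is hypothetical: smoke_test is not a Makefile target. It stops the network even when wait or run-ping fails, so a failed check does not leave containers running. You can override the MAKE variable, for example with MAKE=echo, to print the targets without running them.

```sh
# Hypothetical smoke test built from the Makefile targets described above.
# Run from ~/katzenpost/docker. MAKE defaults to "make" and is expanded
# unquoted so it may carry options (for example MAKE="make -s").
smoke_test() (
    mk=${MAKE:-make}
    $mk start || exit 1
    result=0
    # Wait for consensus, then ping; remember any failure.
    { $mk wait && $mk run-ping; } || result=1
    # Always stop the network, even if the checks failed.
    $mk stop
    exit "$result"
)
```

For example:

~/katzenpost/docker$ smoke_test && echo "smoke test passed"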

Several command targets can be used to uninstall the Docker image and restore your system to a clean state. The following examples demonstrate the commands and their output.

- clean-bin

  To stop the network and delete the compiled binaries, run the following command:

  ~/katzenpost/docker$ make clean-bin
  [ -e voting_mixnet ] && cd voting_mixnet && DOCKER_HOST=unix:///run/user/1000/podman/podman.sock docker-compose down --remove-orphans; rm -fv running.stamp
  Stopping voting_mixnet_auth3_1 ... done
  Stopping voting_mixnet_servicenode1_1 ... done
  Stopping voting_mixnet_metrics_1 ... done
  Stopping voting_mixnet_mix3_1 ... done
  Stopping voting_mixnet_auth2_1 ... done
  Stopping voting_mixnet_mix2_1 ... done
  Stopping voting_mixnet_gateway1_1 ... done
  Stopping voting_mixnet_auth1_1 ... done
  Stopping voting_mixnet_mix1_1 ... done
  Removing voting_mixnet_auth3_1 ... done
  Removing voting_mixnet_servicenode1_1 ... done
  Removing voting_mixnet_metrics_1 ... done
  Removing voting_mixnet_mix3_1 ... done
  Removing voting_mixnet_auth2_1 ... done
  Removing voting_mixnet_mix2_1 ... done
  Removing voting_mixnet_gateway1_1 ... done
  Removing voting_mixnet_auth1_1 ... done
  Removing voting_mixnet_mix1_1 ... done
  removed 'running.stamp'
  rm -vf ./voting_mixnet/*.alpine
  removed './voting_mixnet/echo_server.alpine'
  removed './voting_mixnet/fetch.alpine'
  removed './voting_mixnet/memspool.alpine'
  removed './voting_mixnet/panda_server.alpine'
  removed './voting_mixnet/pigeonhole.alpine'
  removed './voting_mixnet/ping.alpine'
  removed './voting_mixnet/reunion_katzenpost_server.alpine'
  removed './voting_mixnet/server.alpine'
  removed './voting_mixnet/voting.alpine'

  This command leaves in place the cryptographic keys, the state data, and the logs.

- clean-local-dryrun

  To display a preview of what clean-local would remove, without actually deleting anything, run the following command:

  ~/katzenpost/docker$ make clean-local-dryrun

- clean-local

  To delete both compiled binaries and data, run the following command:

  ~/katzenpost/docker$ make clean-local
  [ -e voting_mixnet ] && cd voting_mixnet && DOCKER_HOST=unix:///run/user/1000/podman/podman.sock docker-compose down --remove-orphans; rm -fv running.stamp
  Removing voting_mixnet_mix2_1 ... done
  Removing voting_mixnet_auth1_1 ... done
  Removing voting_mixnet_auth2_1 ... done
  Removing voting_mixnet_gateway1_1 ... done
  Removing voting_mixnet_mix1_1 ... done
  Removing voting_mixnet_auth3_1 ... done
  Removing voting_mixnet_mix3_1 ... done
  Removing voting_mixnet_servicenode1_1 ... done
  Removing voting_mixnet_metrics_1 ... done
  removed 'running.stamp'
  rm -vf ./voting_mixnet/*.alpine
  removed './voting_mixnet/echo_server.alpine'
  removed './voting_mixnet/fetch.alpine'
  removed './voting_mixnet/memspool.alpine'
  removed './voting_mixnet/panda_server.alpine'
  removed './voting_mixnet/pigeonhole.alpine'
  removed './voting_mixnet/reunion_katzenpost_server.alpine'
  removed './voting_mixnet/server.alpine'
  removed './voting_mixnet/voting.alpine'
  git clean -f -x voting_mixnet
  Removing voting_mixnet/
  git status .
  On branch main
  Your branch is up to date with 'origin/main'.

- clean

  To stop the network and delete the binaries, the data, and the go_deps image, run the following command as superuser:

  ~/katzenpost/docker$ sudo make clean
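Because clean-local deletes data, you may want to preview the deletion before running it. The following wrapper is hypothetical: confirm_clean_local is not part of the Makefile. It runs clean-local-dryrun, asks for confirmation on the terminal, and runs clean-local only if you answer y. It does not cover clean, which must be run as superuser.

```sh
# Hypothetical wrapper: preview with clean-local-dryrun, then ask before
# running clean-local. Prompts go to stderr so stdout carries only make
# output. MAKE defaults to "make".
confirm_clean_local() (
    mk=${MAKE:-make}
    $mk clean-local-dryrun || exit 1
    printf 'Proceed with make clean-local? [y/N] ' >&2
    read -r answer
    case $answer in
        y | Y | yes | YES) $mk clean-local ;;
        *) echo "Aborted." >&2; exit 1 ;;
    esac
)
```

For example:

~/katzenpost/docker$ confirm_clean_local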

The Docker image deploys a working mixnet with all components and component groups needed to perform essential mixnet functions:

- message mixing (including packet reordering, timing randomization, injection of decoy traffic, obfuscation of senders and receivers, and so on)

- service provisioning

- internal authentication and integrity monitoring

- interfacing with external clients

Warning: While suited for client development and testing, the test mixnet omits performance and security redundancies. Do not use it in production.

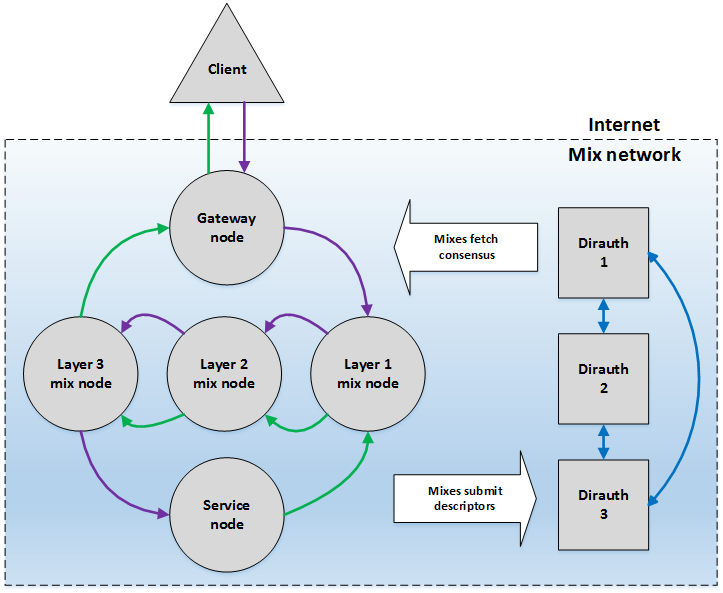

The following diagram illustrates the components and their network interactions. The gray blocks represent nodes, and the arrows represent information transfer.

On the left, the Client transmits a message (shown by purple arrows) through the Gateway node, across three mix node layers, to the Service node. The Service node processes the request and responds with a reply (shown by the green arrows) that traverses the mix node layers before exiting the mixnet via the Gateway node and arriving at the Client.

On the right, directory authorities Dirauth 1, Dirauth 2, and Dirauth 3 provide PKI services. The directory authorities receive mix descriptors from the other nodes, collate these into a consensus document containing validated network status and authentication materials, and make that document available to the other nodes.

The elements in the topology diagram map to the mixnet's component nodes as shown in the following table. Note that all nodes share the same IP address (127.0.0.1, i.e., localhost), but are accessed through different ports. Each node type links to additional information in Components and configuration of the Katzenpost mixnet.

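Table 2: Test mixnet hosts

| Node type | Docker ID | Diagram label | IP address | TCP port |
|---|---|---|---|---|
| Directory authority | auth1 | Dirauth 1 | 127.0.0.1 (localhost) | 30001 |
| Directory authority | auth2 | Dirauth 2 | 127.0.0.1 (localhost) | 30002 |
| Directory authority | auth3 | Dirauth 3 | 127.0.0.1 (localhost) | 30003 |
| Gateway node | gateway1 | Gateway node | 127.0.0.1 (localhost) | 30004 |
| Service node | servicenode1 | Service node | 127.0.0.1 (localhost) | 30006 |
| Mix node | mix1 | Layer 1 mix node | 127.0.0.1 (localhost) | 30008 |
| Mix node | mix2 | Layer 2 mix node | 127.0.0.1 (localhost) | 30010 |
| Mix node | mix3 | Layer 3 mix node | 127.0.0.1 (localhost) | 30012 |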
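Because every node listens on a different port of 127.0.0.1, a quick connectivity check can walk the table. The following sketch is hypothetical: node_port and probe_nodes are not Katzenpost tools. It assumes the ports are reachable from the host as Table 2 describes. It also assumes that bash (for its /dev/tcp redirection) and the coreutils timeout command are installed. An open port only shows that something accepts TCP connections there, so use make status to confirm consensus.

```sh
# Hypothetical lookup based on Table 2: Docker ID -> TCP port on 127.0.0.1.
node_port() {
    case $1 in
        auth1) echo 30001 ;;
        auth2) echo 30002 ;;
        auth3) echo 30003 ;;
        gateway1) echo 30004 ;;
        servicenode1) echo 30006 ;;
        mix1) echo 30008 ;;
        mix2) echo 30010 ;;
        mix3) echo 30012 ;;
        *) return 1 ;;
    esac
}

# Try a TCP connection to each node's port (2-second limit per node)
# using bash's /dev/tcp pseudo-device, and print one line per node.
probe_nodes() (
    for node in auth1 auth2 auth3 gateway1 servicenode1 mix1 mix2 mix3; do
        port=$(node_port "$node")
        if timeout 2 bash -c "exec 3<>/dev/tcp/127.0.0.1/$port" 2>/dev/null; then
            echo "$node $port listening"
        else
            echo "$node $port no connection"
        fi
    done
)
```

For example, the following command prints one line per node, such as mix1 30008 listening:

~/katzenpost/docker$ probe_nodes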

The following tree output shows the files created by the Docker build under katzenpost/docker/voting_mixnet/. During testing and use, you would normally touch only the TOML configuration file associated with each node: authority.toml for each directory authority and katzenpost.toml for each of the other nodes. For help in understanding these files and a complete list of configuration options, follow the links in Table 2: Test mixnet hosts.

katzenpost/docker/voting_mixnet/
|---auth1
|   |---authority.toml
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---link.private.pem
|   |---link.public.pem
|   |---persistence.db
|---auth2
|   |---authority.toml
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---link.private.pem
|   |---link.public.pem
|   |---persistence.db
|---auth3
|   |---authority.toml
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---link.private.pem
|   |---link.public.pem
|   |---persistence.db
|---client
|   |---client.toml
|---client2
|   |---client.toml
|---dirauth.alpine
|---docker-compose.yml
|---echo_server.alpine
|---fetch.alpine
|---gateway1
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---katzenpost.toml
|   |---link.private.pem
|   |---link.public.pem
|   |---management_sock
|   |---spool.db
|   |---users.db
|---memspool.alpine
|---mix1
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---katzenpost.toml
|   |---link.private.pem
|   |---link.public.pem
|---mix2
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---katzenpost.toml
|   |---link.private.pem
|   |---link.public.pem
|---mix3
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---katzenpost.toml
|   |---link.private.pem
|   |---link.public.pem
|---panda_server.alpine
|---pigeonhole.alpine
|---ping.alpine
|---prometheus.yml
|---proxy_client.alpine
|---proxy_server.alpine
|---running.stamp
|---server.alpine
|---servicenode1
|   |---identity.private.pem
|   |---identity.public.pem
|   |---katzenpost.log
|   |---katzenpost.toml
|   |---link.private.pem
|   |---link.public.pem
|   |---management_sock
|   |---map.storage
|   |---memspool.13.log
|   |---memspool.storage
|   |---panda.25.log
|   |---panda.storage
|   |---pigeonHole.19.log
|   |---proxy.31.log
|---voting_mixnet
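To see which configuration files exist in your own build, you can list the TOML files one level below voting_mixnet. The following sketch is hypothetical: list_node_configs is not part of Katzenpost. By default it uses the path shown at the top of the tree, relative to the directory that contains your katzenpost checkout, and it accepts another path as its first argument. Its output also includes the client configurations (client/client.toml and client2/client.toml).

```sh
# Hypothetical helper: list the TOML files exactly one directory below the
# given voting_mixnet directory (default taken from the tree listing),
# sorted in byte order so the output is stable across locales.
list_node_configs() (
    cd "${1:-katzenpost/docker/voting_mixnet}" || exit 1
    find . -mindepth 2 -maxdepth 2 -name '*.toml' | LC_ALL=C sort
)
```

For example, run the first command below from your home directory. Each node directory also contains katzenpost.log, so the second command follows the log of a single mix node.

~$ list_node_configs katzenpost/docker/voting_mixnet

~$ tail -f katzenpost/docker/voting_mixnet/mix1/katzenpost.log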

Examples of complete TOML configuration files are provided in Appendix: Configuration files from the Docker test mixnet.